Mapping the AI & Architecture Landscape

In this web-essay I map out the different types of AI being used in the Architecture industry. A wide variety of tools are being developed and used by Architecture professionals, and I hope to categorise them in a way that makes this landscape easier to understand, in the same way that the early explorers of ‘The Age of Discovery’ helped make the world beyond these shores better understood.

I wrote this web-essay because of the excitement and trepidation some of these tools have generated in the industry. MidJourney in particular produces outputs that can be very intimidating for young and old professionals alike. But understanding how these tools produce their output, and what type of output they can generate, helps put things in perspective.

They are definitely going to change how Architecture is designed, so it is prudent to learn more about them. There is a definite trend towards using computation to assist the design, documentation and construction of Architecture. A.I. is just the next step along a path that started with CAD and continued with BIM. Learning about these tools is vital to stay relevant in today’s market.

The Two Types of AI Technology

There are two distinct technologies used to create these AI tools. They tend to produce very different-looking outputs, and they arrive at those outputs in completely different ways. They are: 1. Machine Learning from examples. 2. Classical AI, created by writing instructions in code for the computer to execute.

What is Machine Learning?

Machine Learning, which powers the Generative AI tools discussed here, covers a group of AI tools whose abilities are created by training a computer on very large numbers of examples of a type of object. Once trained, they can create a new example of that type of object. This is why I refer to this type of AI tool as ‘Machine Learning from Examples’. If you train one of these systems on a million pictures of cats, it can produce a new picture of a cat.
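To make ‘learning from examples’ concrete, here is a deliberately tiny sketch in Python. It is a toy word-chain model, not how ChatGPT or MidJourney are actually built. It records which word follows which in a handful of hypothetical example sentences, then builds a new sentence by repeatedly guessing a plausible next word. Real Generative AI uses neural networks trained on vastly more data, but the core idea is similar: a new example is produced by guessing from patterns in the training examples.

```python
import random
from collections import defaultdict

# Hypothetical training examples; real systems train on billions of items.
EXAMPLES = [
    "the cabin has a timber roof",
    "the house has a flat roof",
    "the cabin has large windows",
]


def train(examples):
    """Record every word that follows each word across the examples."""
    model = defaultdict(list)
    for sentence in examples:
        words = ["<start>"] + sentence.split() + ["<end>"]
        for current, following in zip(words, words[1:]):
            model[current].append(following)
    return model


def generate(model, rng, max_words=20):
    """Build a new sentence by repeatedly guessing a plausible next word."""
    word, output = "<start>", []
    while len(output) < max_words:
        # Random pick weighted by how often each word followed: a guess, not reasoning.
        word = rng.choice(model[word])
        if word == "<end>":
            break
        output.append(word)
    return " ".join(output)


if __name__ == "__main__":
    model = train(EXAMPLES)
    print(generate(model, random.Random(0)))
```

Depending on the random seed, it may print a sentence such as "the house has large windows", which never appears in the examples. That is a new example assembled by guessing. Nothing in the model checks whether the sentence is true, which is why the output of these tools needs checking.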

There are different types of Generative AI tools, classified by what they were trained on and what type of output they produce. The main types available publicly include Text Generators, Computer Code Generators, Image Generators, 3D Model Generators, Video Generators and Action Generators, and each of these has sub-categories.

Image Generators are trained on billions of images of all kinds of subjects and, when given a text ‘prompt’, can generate a novel image matching it. Likewise, ChatGPT has been trained on billions of lines of text and, when ‘prompted’, can produce appropriate text in response.

They are very powerful, but in essence these tools are ‘guessing’, not ‘thinking’ like a human, so they tend to make mistakes. The output of all generative AI tools needs to be checked manually by a human before being used in a professional setting.

Machine Learning is powered by big data and is not possible without it. Its abilities grow greatly with the size of its training dataset. Massive datasets of Architectural BIM models have been collected over the years, and these are now being used to train ‘Machine Learning from examples’ systems.

So can you train a Machine Learning model on a million Hospital BIM models and ask it to create a new Hospital BIM model for you? Yes, but I think the results might not be what you hoped for. These systems are ‘guessing’, and they are unlikely to guess the correct Hospital BIM model for your site. As we will see next, Classical AI might produce more useful outputs.

Machine Learning from Examples – Types

Machine Learning from examples – Generative AI – Text Generator

Large Language Models like ChatGPT and Google Gemini can be extremely useful to Architecture professionals. You can use them to help draft documents such as BIM Execution Plans (as seen in the video above), as well as emails and business administration letters and documents. These Gen AI text generators make things up and produce factual inaccuracies, so anything they produce needs checking by a human.

Machine Learning from examples – Generative AI – Code Generator

In the example video above I use a Large Language Model to write Python code that can be used in Rhino3D. These tools can write code in almost any programming language, and sometimes it works without any need for a human to debug it. For people already familiar with writing code to automate tasks in the Architecture world, this ability is extremely useful and time-saving. However, they are guessing, not thinking, so they make mistakes. They have been known to make up fake API calls, producing code that cannot run.
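Code generators can invent API calls, so it can help to run a quick automated check before pasting their output into Rhino3D. The sketch below uses Python’s built-in `ast` module to parse the generated script, which catches syntax errors. It then lists any calls on the `rs` (rhinoscriptsyntax) alias that are not in an allow-list. The allow-list here is illustrative and hypothetical: you would fill it from the official rhinoscriptsyntax documentation and confirm each name there. The check does not prove the code is correct. It only flags names worth looking up.

```python
import ast

# Hypothetical allow-list: fill this from the official API documentation
# and confirm each name there before relying on it.
KNOWN_RS_FUNCTIONS = {"AddPoint", "AddLine", "AddTextDot", "ObjectsByType"}


def unknown_api_calls(source, module_alias="rs", known=KNOWN_RS_FUNCTIONS):
    """Return the sorted names of `alias.Function(...)` calls not in `known`.

    ast.parse raises SyntaxError if the generated code cannot even be parsed.
    """
    tree = ast.parse(source)
    unknown = set()
    for node in ast.walk(tree):
        if (
            isinstance(node, ast.Call)
            and isinstance(node.func, ast.Attribute)
            and isinstance(node.func.value, ast.Name)
            and node.func.value.id == module_alias
            and node.func.attr not in known
        ):
            unknown.add(node.func.attr)
    return sorted(unknown)


# Hypothetical AI-generated script; AutoDesignFacade is a made-up call.
GENERATED = """
import rhinoscriptsyntax as rs
pt = rs.AddPoint((0, 0, 0))
rs.AutoDesignFacade(pt)
"""

if __name__ == "__main__":
    print(unknown_api_calls(GENERATED))
```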

Machine Learning from examples – Generative AI – Image Generators

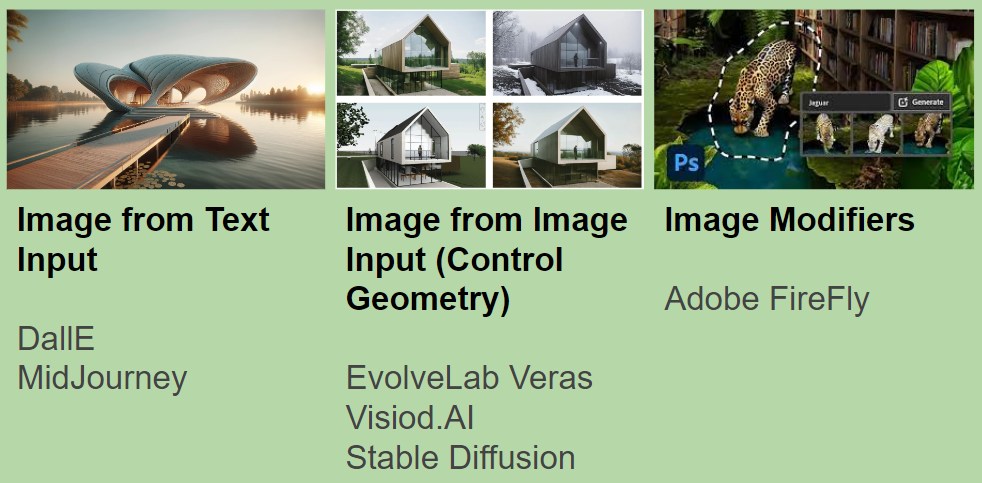

The three most common ways to use Gen AI to create images are: 1. from text input (left); 2. from a control image/geometry plus text (middle); 3. image modifiers, which allow you to edit an image with AI (right).

In the video above I have used ChatGPT/DallE to create some inspiration images for a timber-clad cabin.

Machine Learning from examples – Generative AI – Using images for design inspiration

One of the images generated by the AI became the inspiration for a simple Rhino3D design model, which I use to demonstrate other types of Gen AI. Using AI tools like DallE and MidJourney early in the design process is a great way to incorporate them into your workflow.

Machine Learning from examples – Generative AI – Image from control geometry

In the video above I show how you can take a simple, blocky version of a design concept and quickly generate photorealistic renders of the building. In the video I use the Visoid tool, but another great option is Veras from EvolveLab.

Machine Learning from examples – Generative AI – Image from Text input: limitations of these tools

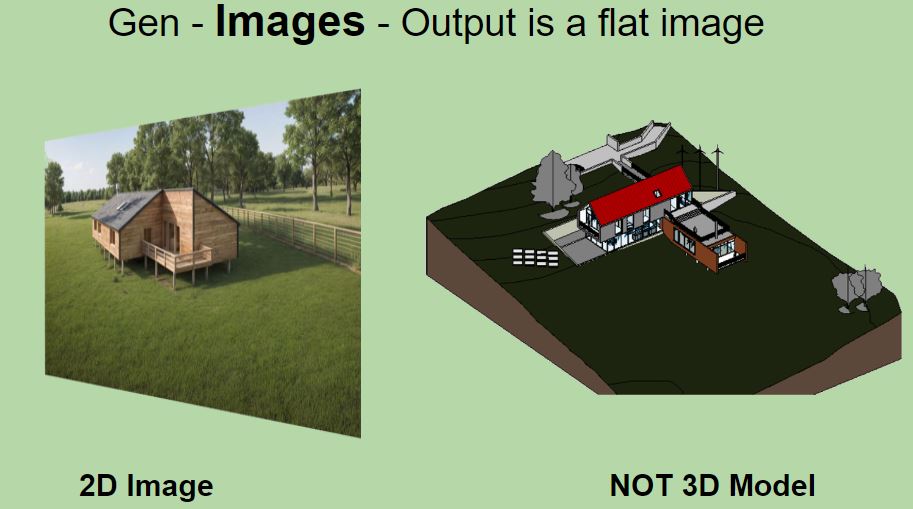

The first key fact is that they produce flat images, not 3D models. You can create some cool pictures from text input, but they are flat images, and making a 3D model from them is mainly a manual process.

But are they really good designers? Below I asked DallE to produce a design for a new Norman Foster tower in London. Is it a good design? What are the wavy bits on the side; are they corner office pods? When it is just guessing, can it arrive at a good answer to the real problem, or are these tools only useful for inspiration?

Below are some images created from text inputs. You will see there are mistakes in them. The bedroom in the red circle has no door into it.

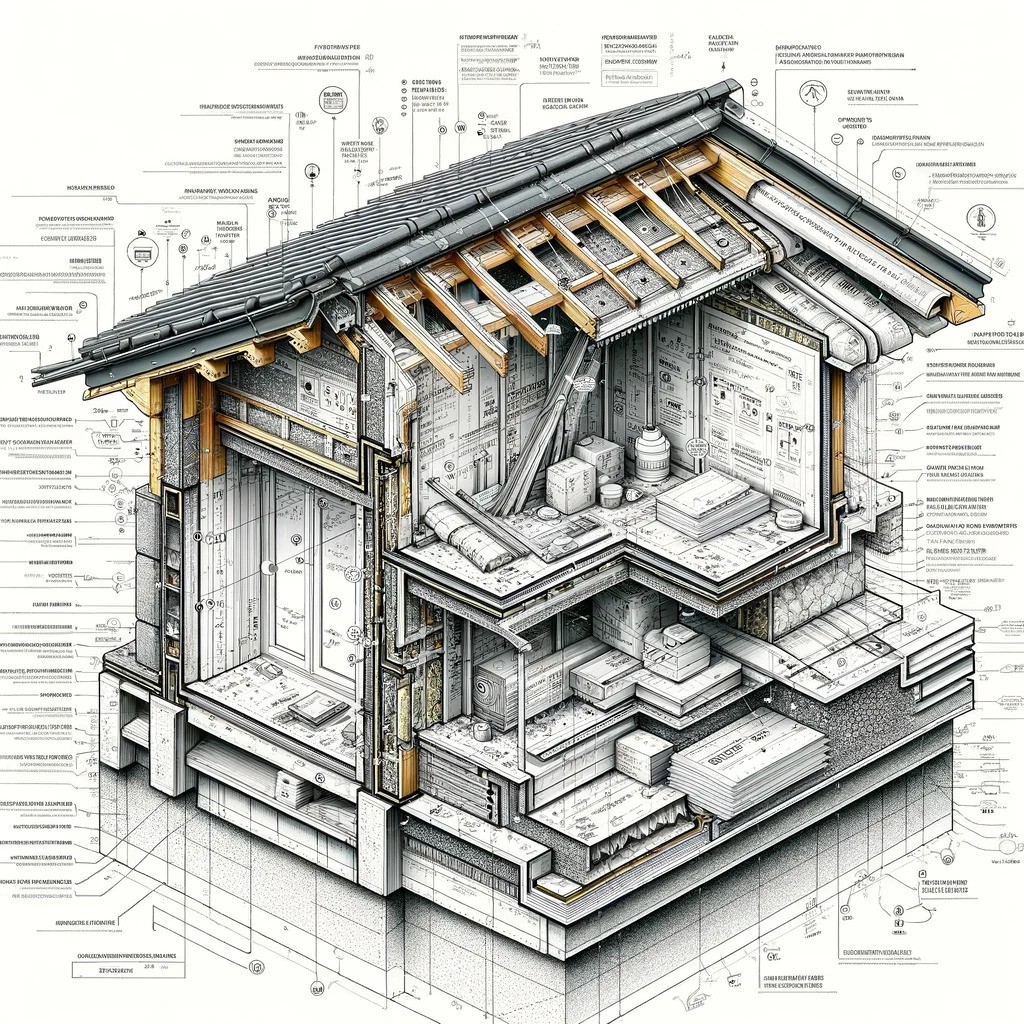

It cannot generate architectural details either. This drawing is confused and does not help solve a detailing problem.

Nor is it any use at generating novel diagrams for passively heating, cooling, ventilating and lighting a building. Though this one looks cool, it does not help solve the architectural problem of how to passively cool a building.

Machine Learning from examples – Generative AI – 3D model from a single image generator

Once you have an AI-generated image, you might want to turn it into a 3D model. In my opinion this is still a manual process, but there are tools out there, like Meshy.ai, that try to convert your AI image into a 3D mesh. As you can see, their abilities are limited. However, they produce great outputs for other industries, such as computer games, where they create excellent 3D assets. I think they were designed to serve that industry rather than Architecture.

Machine Learning from examples – Generative AI – Creating a video from an AI image

Another cool trick AI can help with is turning still images into animated clips. But you will notice that the more movement is created, the more errors appear. This is not the same process as a fly-through of your Revit model, where the geometry stays consistent as you move through space. These AI tools are guessing what the scene might look like from each angle rather than calculating it, as a Revit fly-through does. Revit is closer to Classical AI than to the Machine Learning methods seen here in Runway.
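The difference between guessing and calculating can be shown with a simple pinhole-camera projection, the basic calculation behind perspective views of 3D geometry. This is a hypothetical sketch, not Revit’s actual renderer. The camera sits at a given position and looks along the +y axis, and each 3D point is projected onto the image by dividing by its distance in front of the camera. Every frame is computed from the same geometry, so a building corner lands exactly where the geometry says it should from any viewpoint. That is why a model fly-through stays consistent.

```python
def project(point, camera, focal_length=1.0):
    """Project a 3D point onto the image plane of a camera looking along +y.

    Returns (u, v) image coordinates. Simplified: no rotation, no lens distortion.
    """
    x, y, z = point
    cx, cy, cz = camera
    depth = y - cy  # distance in front of the camera
    if depth <= 0:
        raise ValueError("point is behind the camera")
    return (focal_length * (x - cx) / depth, focal_length * (z - cz) / depth)


# Hypothetical building corners (metres) and two camera positions along a path.
CORNERS = [(0, 20, 0), (10, 20, 0), (10, 20, 6), (0, 20, 6)]

if __name__ == "__main__":
    for camera in [(5, 0, 1.6), (5, 5, 1.6)]:
        print(camera, [project(c, camera) for c in CORNERS])
```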

Machine Learning from examples – Generative AI – Large Action Models (Copyright Swapp and EvolveLAB Glyph)

Every time you do something on your computer, in a piece of software or on your phone, your interactions can be recorded and used to train Large Action Models. Soon, when you work in software like Revit, the computer will be able to guess what you might do next and offer that as the selected tool. In this video, the Swapp tool uses a database of Revit models and their drawings to guess which drawings and views should be created to accompany this shape of building. It can do this because it has been trained on millions of Revit models and drawing sets.
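Large Action Models are far more sophisticated than this, and I do not know the internals of Swapp, Glyph or Rabbit. Still, the basic idea of guessing the next action from recorded interactions can be illustrated with a toy frequency count. The sketch below uses hypothetical session logs of Revit-style commands. It counts which command tends to follow which, then suggests the most common follow-up.

```python
from collections import Counter, defaultdict

# Hypothetical recorded sessions: the order in which a user ran commands.
SESSIONS = [
    ["Wall", "Door", "Window", "Tag"],
    ["Wall", "Door", "Tag"],
    ["Wall", "Window", "Tag"],
]


def train_next_action(sessions):
    """Count how often each command is immediately followed by each other command."""
    counts = defaultdict(Counter)
    for actions in sessions:
        for current, following in zip(actions, actions[1:]):
            counts[current][following] += 1
    return counts


def suggest_next(counts, last_action):
    """Guess the most frequent follow-up, or None if none has been recorded."""
    followers = counts.get(last_action)
    if not followers:
        return None
    return followers.most_common(1)[0][0]


if __name__ == "__main__":
    model = train_next_action(SESSIONS)
    print(suggest_next(model, "Wall"))
```

The suggestion is still a guess. If your next step is unusual, the tool will offer the wrong command.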

The Rabbit AI product uses datasets of people’s interactions with their phones to produce actions from verbal instructions.

Another firm using Large Action Models to automate tasks in AEC software is EvolveLab, whose tool Glyph automates various tasks in Revit. Please see the animated GIF below for more information.

What is Classical AI?

Classical AI tools or systems are created using computer code. They can sometimes produce outputs rivalling Generative AI, though in general they have a different feel. Classical AI is created by writing instructions in code for the computer to execute.

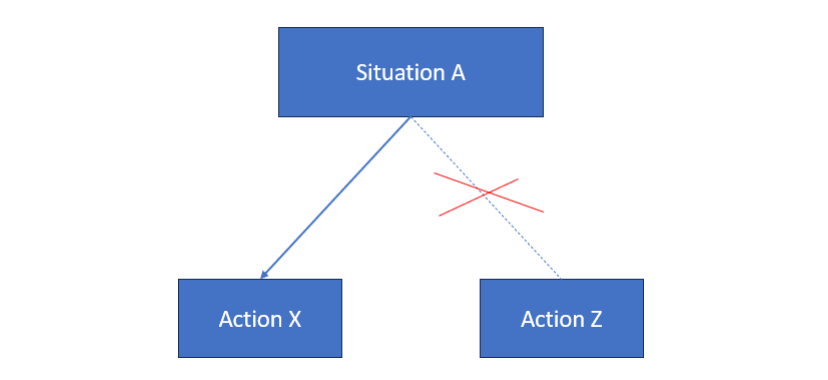

The image above gives a simple explanation of these systems. They are manually coded to perform certain actions in certain situations: in Situation A, perform Action X. For example, if the site is 50 metres wide, generate two rows of buildings on the site.
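The ‘in Situation A, perform Action X’ idea translates directly into code. This is a minimal sketch. The 50-metre rule comes from the example above, while the 25-metre rule and the even spacing of the rows are hypothetical additions that show how rules stack up.

```python
def rows_for_site(site_width_m):
    """Hand-written rules, checked in order: Situation -> Action."""
    if site_width_m >= 50:  # rule from the text: 50 m wide -> two rows
        return 2
    if site_width_m >= 25:  # hypothetical extra rule: one row
        return 1
    return 0  # too narrow for a row of buildings


def row_centrelines(site_width_m):
    """Space the rows evenly across the site width (hypothetical rule)."""
    n = rows_for_site(site_width_m)
    return [site_width_m * (i + 0.5) / n for i in range(n)]


if __name__ == "__main__":
    for width in (60, 30, 12):
        print(width, rows_for_site(width), row_centrelines(width))
```

Given the same site, this always gives the same answer, and every decision can be traced back to a rule someone wrote. That is part of what gives Classical AI its different feel.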

Classical AI tools use logic to generate their output from input, and that logic has to be written down for them by the human creating the system. When designing, you use logic to arrive at some solutions, and sometimes you guess, as a Generative AI tool does. I have divided AI into these two groups for two reasons. They seem similar to the types of mental activity we use when we design, and they are built using two different technologies: ‘Machine Learning from examples’ and writing instructions in computer code.

Classical AI – Types

Classical AI – Visual Programming example of functionality

For most Architecture professionals, Visual Programming is their first step into harnessing the power of computation to design Architecture. I find it very powerful and intuitive to use. The two main tools are DynamoBIM and Rhino/Grasshopper. Please see my blog for more information about these tools and what they can do. They have been around for many years and have already created a new style of Architecture that you have probably seen.

Classical AI – Text based coding example of functionality

Coding can be used to create complex geometry and to automate tasks in software programs, and I find it best suited to automating tasks. In the video above I label every glazed panel with a text note of its area. I used an AI code generator to create the code that automates this task, which shows how these two types of technology (Machine Learning and Classical AI) can be combined to achieve the desired outcome. In Architecture, the two most common text-based programming languages are Python and C#. PyRevit is a great tool for Revit users who wish to customise it using Python.
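The area-labelling task is a good example of logic you can write down. In the video, the areas come from the host software. In this hypothetical, standalone sketch, each glazed panel is simplified to a flat outline of (x, y) corner points in metres. Its area is calculated with the shoelace formula and then formatted as a label.

```python
def polygon_area(vertices):
    """Area of a simple planar polygon from its corners in order (shoelace formula)."""
    twice_area = 0.0
    n = len(vertices)
    for i in range(n):
        x1, y1 = vertices[i]
        x2, y2 = vertices[(i + 1) % n]  # wrap round to the first corner
        twice_area += x1 * y2 - x2 * y1
    return abs(twice_area) / 2


def area_labels(panels):
    """Map each panel name to a text note of its area, e.g. '2.88 m²'."""
    return {name: f"{polygon_area(pts):.2f} m²" for name, pts in panels.items()}


# Hypothetical glazed panels, flattened to 2D outlines in metres.
PANELS = {
    "GL-01": [(0, 0), (1.2, 0), (1.2, 2.4), (0, 2.4)],
    "GL-02": [(0, 0), (2, 0), (0, 3)],
}

if __name__ == "__main__":
    for name, label in area_labels(PANELS).items():
        print(name, label)
```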

Classical AI – Custom / Proprietary Software generic example of functionality

There are some really powerful pieces of custom software, like TestFit and ARCHITEChTURES, that can generate plausible building designs from an input site boundary. The video above shows the generic way they work: a person inputs a site boundary or building location, and the software generates a building or site layout. They look very exciting, but they tend to produce ‘generic’ building proposals. However, they are probably the best hope for overcoming the ‘productivity’ problem in the Architecture industry. As I argue later in this essay, they are a way of decoupling the quality of the Architecture produced from the skills and knowledge of the single professional designing it. The code that creates the building is written by many people, and so it will produce a better output than a building designed by a single designer. Creating buildings by coding will produce system-wide gains. TestFit has already had buildings built based on the output of its highly complex Classical AI system.
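Tools like TestFit handle irregular boundaries and a huge number of rules. The following is therefore only a hypothetical sketch of the generic idea: take a site (rectangular here), apply a setback, and fill the remaining area with a grid of building footprints separated by a fixed gap. All dimensions are made up for illustration.

```python
def footprint_grid(site_w, site_d, setback, foot_w, foot_d, gap):
    """Return (x, y, width, depth) rectangles for footprints that fit on a
    rectangular site after a setback on every side. Units: metres."""
    usable_w = site_w - 2 * setback
    usable_d = site_d - 2 * setback
    if usable_w < foot_w or usable_d < foot_d:
        return []  # the site is too small for even one footprint
    # n footprints need n*foot + (n-1)*gap, so n = (usable + gap) // (foot + gap)
    cols = int((usable_w + gap) // (foot_w + gap))
    rows = int((usable_d + gap) // (foot_d + gap))
    return [
        (setback + c * (foot_w + gap), setback + r * (foot_d + gap), foot_w, foot_d)
        for r in range(rows)
        for c in range(cols)
    ]


if __name__ == "__main__":
    layout = footprint_grid(100, 60, setback=5, foot_w=20, foot_d=12, gap=6)
    print(len(layout), "footprints:", layout)
```

Change any input and the whole layout regenerates consistently. If a rule turns out to be wrong, fixing it once fixes every future layout, which is the kind of system-wide gain discussed below.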

Human and Machine Analogues

It is my theory that these two styles of AI are in fact analogues of the different styles of thinking we use when designing. When you think purely about the shape of the building, you are using your intuition to guess what will look good on the site. This is similar to how Generative AI generates images. However, when you sit down with a ruler and work out how to fit the different spaces onto the site, this is similar to how Classical AI systems create their output by following instructions and rules.

Self driving cars and architecture by code

At present, the majority of buildings are designed in a standalone process, similar to the way cars are driven by individual drivers. That is to say, the quality of the driving, or of the building design, comes down to the skills and knowledge of the individual driving the car or designing the building.

A great effort is currently being made to create self-driving cars. If it succeeds, it will bring a major change to road safety. Suppose a single self-driving car were in an accident found to be caused by a problem in its code. The code could then be fixed, in the same way a single driver could receive further training after an accident. The difference, and the advantage, is that when you improve the code for a self-driving car, the fix applies to all the cars on the road. They learn from each other and improvements are made system-wide. With human drivers, only the individual involved in the crash learns from the mistake, not other drivers.

How does this apply to Architecture? With the increasing use of code to design buildings, the gains from sharing code, or snippets of code, will soon help Architecture improve on a system-wide scale. Creating buildings using code means that how much a single Architectural professional can learn, or which skills they can develop, will no longer be a limiting factor. As with self-driving cars, the systems will learn from each other and improvements will occur system-wide.

Main Take-Aways from the web-essay

- There are two types of AI being used in Architecture

- 1. Machine Learning from examples: trained on large datasets, these systems can generate new examples of what they have been trained on, whether text, actions or images.

- 2. Classical AI created by writing instructions in code for the computer to execute

- They produce different types of outputs and can be used in different ways.

- Machine Learning needs a lot of human effort and imagination to turn what these systems generate into a ‘buildable’ output.

- Classical AI produces more ‘buildable’ outputs, but they look less futuristic.

- The two different styles of A.I. are analogues of two different human mental processes we use when designing.

- As more Architecture is designed using computers, there will be system-wide gains. The result will not be limited by what a single designer is able to learn in their career, but will be designed using collective intelligence enabled by sharing code.

- These new tools are more like Co-Pilots than competitors.

Conclusions and Predictions

At the moment there seems to be a clear distinction between the two methods of creating AI tools: Machine Learning from examples, and writing instructions in code to create complex systems. Most tools use only one type of technology to generate their output. But I predict that future tools will use a combination of the two. These blended tools will be very powerful, acting as both competition to and co-pilots for human actors.

The tools currently on the market still need lots of human creativity, guidance and effort to turn their outputs into physical buildings.

AI image generators are great for inspiration, but they cannot produce 3D outputs, solve detailing problems, develop novel strategies for passively lighting and cooling buildings, or solve space-planning problems.

‘Blended tool’ example (Machine Learning/coding) – D.TO: Design TOgether

Design TOgether are creating a Revit Co-Pilot using both machine learning and coding. Their tool will assist you with creating construction documentation. They have used coding to create a Revit plugin and Machine Learning to power its advanced features.

D.TO’s vision is to transform the world of AEC through human-centric A.I. technology and to empower every design professional to work smarter for high-performance building design. D.TO’s strategy is to build an innovative platform which enlivens current inefficient workflows of building assembly design with interactive machine learning implementations.

Recommendations

- In my opinion you could be using MidJourney for design inspiration and early client discussions.

- You could be using tools like Visoid and EvolveLAB Veras to turn blocky concept models into realistic renders faster than traditional processes. Don’t use these renders for Planning drawings (they may have unintentional features).

- You could be using tools like DynamoBIM or Rhino/Grasshopper to create complex geometry and to overcome project bottlenecks.

- Use ChatGPT to help draft documents and emails. Beware: they make things up and get things wrong. They are guessing, not thinking, so their output needs to be checked by a human.

- Use ChatGPT to help you write text-based code for your BIM software, to solve complex problems more efficiently.

- Explore the use of emerging ‘Proprietary Software’ for massing studies or site optioneering studies, such as TestFit & ARCHITEChTURES.

- Explore the use of Large Action Models (Swapp and EvolveLAB Glyph) to automate the design drawing production stage.

Additional Video Examples of Classical and Machine Learning AI

Machine Learning from examples – Generative AI – Image from control geometry

Generative AI example of an image from control geometry. The initial model was created in Rhino3D and the render was then made in Visoid.AI.

Machine Learning from examples – Generative AI – Hand sketch to render example video (Visoid.ai copyright)

In the video below I use an AI renderer tool called Visoid to generate a realistic-looking image from a hand sketch.

Machine Learning from examples – Generative AI – ChatGPT Richard Rogers House and drawings

In this video I used ChatGPT/DallE to try generating a render and some drawings of a Richard Rogers house in a desert. Please note how the plans and other drawings do not match the first render image. It cannot create 3D-consistent images, because it is not driven by 3D content in the background the way Revit or other BIM software is.

Classical AI – Examples of Custom Software

In this video I show two pieces of custom software created by writing computer code. The first is TestFit, which is great and has already had real buildings built. The second is ARCHITEChTURES, whose tool also looks very interesting.

Classical AI – Interacting with a Visual Programming script

In the video below you see the roof and supports of a 3D walkway change shape when the location line of the walkway is moved about. This demonstrates how the geometry of the walkway roof is created ‘procedurally’ by the visual programming script.
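The procedural idea behind the walkway script can be sketched in a few lines of Python. This is not the Grasshopper definition from the video. It is a hypothetical equivalent in which the support positions are derived from the two end points of the location line, with supports no more than a chosen spacing apart. Move an end point and every support is recalculated from the rule rather than redrawn by hand.

```python
import math


def walkway_supports(start, end, max_spacing=3.0, roof_height=3.0):
    """Place supports evenly along a straight location line.

    start, end: (x, y) end points in metres. Returns (x, y, height) for each
    support, using the fewest equal bays no longer than max_spacing.
    """
    (x1, y1), (x2, y2) = start, end
    length = math.hypot(x2 - x1, y2 - y1)
    bays = max(1, math.ceil(length / max_spacing))
    return [
        (x1 + (x2 - x1) * i / bays, y1 + (y2 - y1) * i / bays, roof_height)
        for i in range(bays + 1)
    ]


if __name__ == "__main__":
    print(walkway_supports((0, 0), (9, 0)))   # original location line
    print(walkway_supports((0, 0), (12, 0)))  # end point moved: supports regenerate
```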

Classical AI – Visual Programming – Genetic Algorithm Solver

Below is my own simple attempt to create a site layout generator in Grasshopper. I used the genetic algorithm solver Galapagos to try to maximise the size of the car park.
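Galapagos itself runs inside Grasshopper, so as a stand-in here is a hypothetical, simplified genetic algorithm in plain Python. It follows the same loop: generate candidate layouts, score them, keep the best, breed and mutate them, and repeat. The site size, minimum building footprint, parking bay and aisle dimensions, and the way bays are counted are all assumptions made up for illustration. The building sits in one corner, and parking is counted in the two rectangles left beside and below it.

```python
import math
import random

# Hypothetical inputs (metres / square metres), for illustration only.
SITE_W, SITE_D = 60.0, 40.0
MIN_FOOTPRINT = 800.0
BAY_W, BAY_D, AISLE = 2.5, 5.0, 6.0


def bays_in(w, d):
    """Count bays in a w x d rectangle, with rows of bays running along w."""
    per_row = math.floor(w / BAY_W)
    module = 2 * BAY_D + AISLE  # double-loaded: bay + aisle + bay
    full_modules = math.floor(d / module)
    bays = 2 * full_modules * per_row
    if d - full_modules * module >= BAY_D + AISLE:  # room for one single-loaded row
        bays += per_row
    return bays


def fitness(genome):
    """Parking bays around a corner building of (width, depth); 0 if too small."""
    bw, bd = genome
    if bw * bd < MIN_FOOTPRINT:
        return 0
    return bays_in(SITE_W - bw, SITE_D) + bays_in(bw, SITE_D - bd)


def clamp(value, low, high):
    return max(low, min(high, value))


def evolve(generations=60, pop_size=40, seed=1):
    rng = random.Random(seed)
    population = [(rng.uniform(10, SITE_W), rng.uniform(10, SITE_D))
                  for _ in range(pop_size - 1)]
    population.append((40.0, 20.0))  # a hand-drawn starting layout
    history = []
    for _ in range(generations):
        population.sort(key=fitness, reverse=True)
        history.append(fitness(population[0]))
        elite = population[:2]  # the best layouts survive unchanged
        children = []
        while len(children) < pop_size - len(elite):
            # Tournament selection: the fittest of three random layouts breeds.
            a = max(rng.sample(population, 3), key=fitness)
            b = max(rng.sample(population, 3), key=fitness)
            t = rng.random()  # blend crossover between the two parents
            w = t * a[0] + (1 - t) * b[0] + rng.gauss(0, 2)  # plus a small mutation
            d = t * a[1] + (1 - t) * b[1] + rng.gauss(0, 2)
            children.append((clamp(w, 10, SITE_W), clamp(d, 10, SITE_D)))
        population = elite + children
    best = max(population, key=fitness)
    return best, fitness(best), history


if __name__ == "__main__":
    best, score, _ = evolve()
    print("building (w, d):", best, "parking bays:", score)
```

As with Galapagos, the answer is the best found in the time allowed, not a guaranteed optimum. It is also only as good as the fitness rule a person wrote for it.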

Thanks for reading. Simon MCIAT (Director of SJM Studio Leeds).

Please contact me for a CPD, in person or online.