In my opinion there are two distinct types of AI being used in the Architecture and Construction sector: 1. Classical AI systems, which use ‘hard coded’ rules to control behaviour, and 2. Machine Learning models, which can generate new versions of the examples they have been trained on. In this article I will discuss the different ways they are being used.

1. What are classical AI systems?

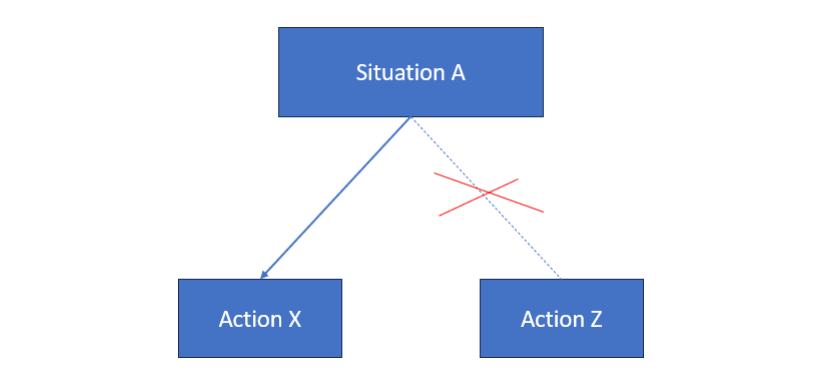

These are systems where the behaviour is determined by rules ‘hard coded’ into the system. For example: ‘in situation A do X, in situation B do Y’.

These classical AI systems are created using computer code. They can be written in text-based languages like Python and C#, or in visual programming tools like DynamoBIM and Grasshopper3D. The person writing the code controls how the system behaves in different situations. They are very time consuming to create because every scenario has to be thought about, and a rule controlling the response then has to be written down in the code.
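
As a minimal sketch of what ‘in situation A do X, in situation B do Y’ looks like in Python: the function name and the width thresholds below are made up for illustration and are not taken from any building regulation or real tool.

```python
def corridor_rule(width_mm: int, is_escape_route: bool) -> str:
    """Check a corridor width against hand-written rules."""
    # Assumed thresholds, invented for this example only.
    # Situation A: escape route -> needs the wider minimum.
    # Situation B: any other corridor -> narrower minimum is enough.
    min_width = 1200 if is_escape_route else 900
    if width_mm >= min_width:
        return "pass"
    return f"fail: widen by {min_width - width_mm} mm"


print(corridor_rule(1000, is_escape_route=True))
print(corridor_rule(1000, is_escape_route=False))
```

Every situation the system can handle is one that somebody thought of in advance and typed in, which is why these systems take so long to build.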

2. What are Machine Learning or Generative AI systems?

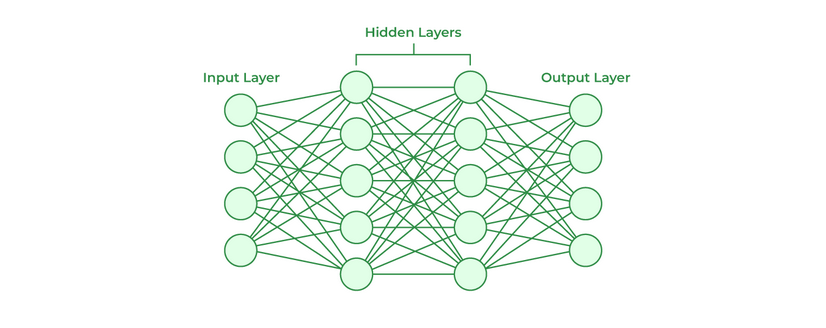

These systems are powered by BIG data, that is millions of images or millions of books. Their behaviour is not controlled by code written by a human in the same way as classical AI. Instead, the Machine Learning models are shown millions of examples of something and are then able to produce a new version of the same thing. They are sometimes called Neural Networks, or referred to as Generative AI or Machine Learning models.

BIG Data and predicting the future

Big data is what is powering the Generative AI revolution. It is behind the text and image generation tools, and also behind tools that generate predictions of crimes, such as those used by law enforcement.

Say for example there was a mugging at the end of your road once every six months. It would be sensible to assume that in the next six months there will be another mugging. This is because the conditions do not change much: the road network stays the same, and maybe there is poor lighting or a bad area nearby.

The police have kept detailed records of every reported crime over the last few decades, including the type of crime and where and when it happened. This BIG amount of data allows them to make predictions about where future crimes might take place, and police cars are then sent to the locations where the machine learning model has predicted a crime. How is this so? The model has been trained on all of that data, so it can create the next potential crime in the series: one that matches the patterns in the crime records it was trained on.

The Machine Learning model generates a new potential crime based upon its training data, just as ChatGPT creates new text or MidJourney creates new images.
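
The mugging example can be reduced to a very simple rate estimate. The sketch below is only that: a count of past incidents turned into an average per six months. The records are invented toy data, the function name is hypothetical, and real predictive policing tools use far more elaborate models than this.

```python
from collections import Counter

# Invented toy records: (location, year) of each reported incident.
records = [
    ("end of road", 2021), ("end of road", 2021),
    ("end of road", 2022), ("end of road", 2022),
    ("high street", 2022),
]


def expected_per_half_year(records, location, years_observed):
    """Average number of past incidents per six months at one location."""
    count = Counter(loc for loc, _ in records)[location]
    return count / (years_observed * 2)


print(expected_per_half_year(records, "end of road", years_observed=2))
print(expected_per_half_year(records, "high street", years_observed=2))
```

The location with the higher historical rate is the one the prediction points at, which is the same pattern-matching idea scaled down to a few lines.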

How do Machine Learning Models like ChatGPT or Google Bard work? Loki the Viking or MCU Superhero?

If you type the prompt ‘Tell me about Loki’ into ChatGPT, it might tell you about Loki the TV series. It has been trained on thousands of webpages describing the Loki TV series, and it will produce an average version of a description of Loki.

But it might choose the less common answer to ‘Tell me about Loki’ and tell you about the Norse god Loki. This is caused by something called the ‘temperature’. This parameter makes responses sound more natural and less robotic, but it can also make the content less accurate. A higher temperature makes the model more likely to pick a less common next word rather than always the highest ranked one. These systems are really just very advanced autofill: next word predictors.

It is just guessing at what the response should be, so sometimes it guesses Loki from the MCU and sometimes it guesses Loki the Norse god.

When these systems make things up, it is called ‘hallucinating’. They guess at the answer by drawing on the examples they have been trained on. They are not thinking in the way a human does.
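
The effect of temperature can be sketched in a few lines of Python. This is an illustration under assumptions, not how ChatGPT is actually implemented: the two candidate answers and their scores are made up, and a real model ranks tens of thousands of possible next words rather than two whole answers.

```python
import math
import random


def softmax_with_temperature(scores, temperature):
    """Turn raw scores into probabilities; a higher temperature flattens them."""
    scaled = [s / temperature for s in scores]
    top = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(s - top) for s in scaled]
    total = sum(exps)
    return [e / total for e in exps]


# Made-up scores: the TV series is the 'highest ranked' continuation.
candidates = ["the Marvel TV series", "the Norse god"]
scores = [2.0, 1.0]


def pick(temperature, rng):
    """Sample one candidate using the temperature-adjusted probabilities."""
    probs = softmax_with_temperature(scores, temperature)
    return rng.choices(candidates, weights=probs, k=1)[0]


rng = random.Random(0)
print(softmax_with_temperature(scores, 0.1))  # almost always the TV series
print(softmax_with_temperature(scores, 2.0))  # the Norse god gets a real chance
print(pick(2.0, rng))
```

At a low temperature the top-ranked answer wins nearly every time; at a higher temperature the less common answer is chosen noticeably more often.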

Generating a police report. Not thinking like a human.

Say you had to write a police report of a crime, and a key detail is whether the robbers exited the bank and went right or left. A human would look at the witness statements or other information and write the correct direction in the report. These machine learning tools are not doing the same thing. They would just guess and put left or right, because they are not thinking like a human; they are just guessing.

So how is AI being used in Architecture?

Firstly, tools like MidJourney are being used for design inspiration, though the results then have to be manually modelled to produce 3D geometry. ChatGPT is used by architects to help write computer code that generates shapes and automates tasks. Then there are the software developers creating tools like TestFit that generate building proposals.

Examples of Classical and Generative AI tools in AEC

Below are some videos of different types of classical AI and Gen AI tools currently being used in the AEC sector.

Classical AI Example 1 – Hypar / Hypar is a website where you can create, store, share and deploy algorithms that create buildings or components of buildings.

Classical AI Example 2 – TestFit / TestFit is a software tool designed to allow real estate developers to develop their own designs for potential building sites. You draw the outline of the building site over a map in the software. The software then automatically generates a collection of buildings and roads on the site and gives you the ability to adjust it. It also tells the real estate developer how many apartments can be fitted on the site, which is why it is called TestFit.

Classical AI Example 3 – DynamoBIM / DynamoBIM is a visual programming tool made by Autodesk that works inside Revit. You can use it to create complex geometry or automate repetitive tasks. Both of these abilities help overcome project bottlenecks. Say for example you have a complex facade pattern that is slow to model manually. If the size of the building changes, rebuilding the facade by hand becomes a project bottleneck. DynamoBIM can be used to create this complex facade, and if the building size changes you just re-run the script, overcoming the bottleneck.
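
The ‘just re-run the script’ idea can be sketched in plain Python. This is not Dynamo nodes or the Revit API, only an illustration of the principle: the function name and the 1.5 m maximum panel size are assumptions made up for the example.

```python
import math


def facade_panels(width_m, height_m, max_panel_m=1.5):
    """Split a rectangular facade into equal panels no larger than max_panel_m."""
    cols = math.ceil(width_m / max_panel_m)
    rows = math.ceil(height_m / max_panel_m)
    panel_w = width_m / cols
    panel_h = height_m / rows
    # Lower-left corner (x, z) of every panel, row by row.
    return [(c * panel_w, r * panel_h) for r in range(rows) for c in range(cols)]


print(len(facade_panels(30, 12)))  # 20 columns x 8 rows = 160 panels
print(len(facade_panels(36, 12)))  # building widened: re-run, now 192 panels
```

The facade is defined by rules rather than by hand-placed panels, so a change in building size costs one re-run instead of a manual rebuild.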

Classical AI Example 4 – Rhino.Inside / Rhino.Inside lets Rhino and its visual programming tool Grasshopper3D run inside other software such as Revit. It does a similar job to DynamoBIM, but it has more features and can create more complex geometry more easily.

Below are examples of Generative AI tools being used in the AEC sector.

Machine Learning Gen AI Example 1 – EvolveLAB AI Render / EvolveLAB are a BIM consultancy in America. They have developed an AI render engine that lets you turn blocky massing models into realistic renders. I think this will be most useful for early concept generation.

Machine Learning Gen AI Example 2 – MidJourney / This is an awesome AI tool that can create FLAT images of almost anything you can think of, and more. It has been trained on millions of images of many different things. Say for example you wanted a Batman branded coffee machine: simply type that into the prompt and it will create a flat image of an amazing design for a Batman coffee machine. Even though the results look like 3D models, they are in fact just flat images.

Machine Learning Gen AI Example 3 – ChatGPT Code Generation / ChatGPT and Google Bard have been trained on billions of lines of text from the internet and books. Their general knowledge is astounding. If you want to know what the traditional wedding food is in Argentina, or what the most famous building in Timbuktu is, simply ask them and they will usually know. They are also amazing at creating computer code in various languages.

Conclusion and recommendations

•These tools are already a major part of the AEC industry. Firms like Zaha Hadid Architects use MidJourney for design inspiration, and Foster + Partners use DynamoBIM in the creation of their Revit models.

•Not everyone wants to learn these tools, so they are best implemented through a small group of specialists who do this work, like Foster + Partners’ ‘Specialist Modelling Group’. The specialists can be deployed across different projects to help overcome bottlenecks when needed. They should give presentations about how they work so other staff know when to ask for their help.

•I would suggest focusing on Rhino.Inside.Revit and Grasshopper3D. Encourage the use of Rhino and Grasshopper for early concept design, and have a small team of specialists in these tools who are deployed on different projects across the offices.

•These AI and Visual Programming tools will not help with standardisation of Revit working practices across the offices. But they will help solve project bottlenecks and increase design flair.

•Grasshopper3D is a bigger tool than the Autodesk-made DynamoBIM. I would say Grasshopper3D is the future. Encourage the use of Rhino and Grasshopper for concept design.

•Use MidJourney for concept inspiration, and use AI render tools for optioneering, with a blocky 3D concept model controlling the output.